Something that I’ve become more concerned about in our household is our consumption of so-called ‘ultra-processed food’. My wife has had a few health issues over the past 18 months, including an elevated risk of developing type 2 diabetes, which has led her to cut her sugar intake. This coincided with the publication of several books about ultra-processed food, and we’ve since made some changes to reduce our exposure to it.

The books

Before I go into much detail, here are the books I’m talking about:

- Ultra-Processed People by Dr Chris van Tulleken

- The Way We Eat Now by Dr Bee Wilson

- Ravenous by Henry Dimbleby

Note: these are sponsored links, but feel free to purchase these books from your local independent tax-paying bookshop, or borrow them from a library.

If you only read one of these, read Chris van Tulleken’s Ultra-Processed People. Chris is probably better known as ‘Dr Chris’ from the CBBC show Operation Ouch, which he presents with his twin brother Dr Xand (and later Dr Ronx). He’s a triple threat: a doctor who is also a research scientist and a TV presenter, and it shows. He’s able to distil academic research into an easily readable format, which isn’t surprising when you consider that this is what he does for his patients and his TV audience. But it also means that there’s academic rigour behind this book.

Dr Xand pops up quite a bit in this book. Chris and Xand are identical twins but have different physiques. Chris puts this down to Xand’s time in the USA, where he was exposed to more ultra-processed food, and so he ended up higher on the BMI scale than his brother (although Chris acknowledges that BMI is a discredited measure). When they both contracted Covid-19 in 2020, Xand was more seriously ill than Chris.

Over the course of the book, we learn about the growing evidence linking ultra-processed food to obesity, and about how the food industry tries to downplay it.

How do we define ultra-processed food?

Chris acknowledges that it can be hard to define what ultra-processed food is. The best model that we have is the Nova classification, developed by Prof Carlos Augusto Monteiro at the University of São Paulo in Brazil. Essentially, this splits food into four groups:

- Nova 1 – wholefoods, such as fruit, vegetables and nuts, that can be eaten with little or no processing

- Nova 2 – culinary ingredients, such as vinegar, oils, butter and salt, that require some processing

- Nova 3 – processed food, made by combining foods from the Nova 1 and Nova 2 categories. This is basically anything that’s been cooked from those ingredients, so homemade bread would fall under here.

- Nova 4 – ultra-processed food, which is made from formulations of food additives and may not include any ingredients from the Nova 1 category.

Probably the easiest way to work out whether something fits into the Nova 4 category is to look at the list of ingredients. If it includes one or more ingredients that you wouldn’t expect to find on sale at a typical large supermarket, then it’s probably ultra-processed food. These include things like emulsifiers, artificial sweeteners, preservatives, and ingredients identified only by those dreaded E numbers that my mum used to be wary of back in the 1980s.

And a lot of food falls into the Nova 4 category: almost all breakfast cereals, and any bread that is made in a factory, are examples.

Why are ultra-processed foods so common?

Fundamentally, it comes down to cost and distribution. For example, a tin of tomatoes that contains some additional ultra-processed ingredients may be cheaper than a tin containing just tomatoes (and perhaps a small amount of acidity regulator). It’s a bit like drug dealers cutting their product with something like flour to make more money when they sell it on.

Distribution is also a factor. A loaf of bread that is baked in a factory may take a couple of days to reach supermarket shelves, where it then needs to stay ‘fresh’ for a few more days. So manufacturers add various preservatives and other ingredients to keep the bread soft.

You can bake your own bread using only yeast, flour, salt, olive oil and water. But Tesco will sell you a loaf of Hovis white bread that also contains ‘Soya Flour, Preservative: E282, Emulsifiers: E472e, E471, E481, Rapeseed Oil, and Flour Treatment Agent: Ascorbic Acid’. These keep the bread soft and extend its shelf life: a homemade loaf may start going stale after 2–3 days, whereas a shop-bought loaf may go mouldy before it goes stale.

Other common examples

Breakfast cereals are marketed as a healthy start to the day, but often contain worryingly high amounts of sugar. And there’s evidence that their heavy use of ultra-processed ingredients interferes with the body’s ability to regulate appetite, leading to over-eating.

Supermarket ice cream is also often ultra-processed. The extra additives ensure that it can survive being stored at varying temperatures whilst in transit. It’s notable that most UK ice cream is manufactured by just two companies – Froneri (Nestlé, Cadbury, Kelly’s, Häagen-Dazs and Mövenpick brands) and Unilever (Wall’s and Ben & Jerry’s). There are many small ice cream producers, but the challenge of transporting ice cream and keeping it at the right temperature limits their reach.

I’m also worried about a lot of newer ‘plant-based’ foods that are designed to have the same taste and texture as meat and dairy products. You can eat a very healthy plant-based diet, but I would argue that some ultra-processed plant-based foods are less healthy than the meat and dairy products that they’re mimicking.

What we’re doing to cut our intake of ultra-processed food

We now bake our own bread in a bread machine. Not only do you avoid ultra-processed ingredients, but freshly baked bread tastes so much nicer than a loaf bought in a shop. It takes a little more planning, but most of the ingredients keep well, so they don’t need to be bought fresh.

We also buy more premium products where we can. Rather than refined vegetable oils, we buy cold-pressed oil for frying, and, as mentioned above, tins of tomatoes that contain just tomatoes. Of course, these products cost more, which is something that both Chris and Henry discuss in their books. If people on low incomes cannot afford good food, it should come as no surprise that there’s a link between obesity and poverty.

And we’ve had to give up Pringles. Chris devotes practically a whole chapter to them, and how they trick the brain into wanting more.

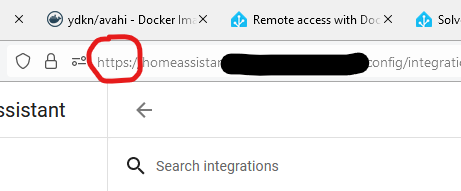

You can download the Open Food Facts app to help decipher food labels. It includes a barcode scanner, and will warn you if what you’ve scanned is ultra-processed food. The good news is that there are still plenty of convenience foods that are not ultra-processed – there are some suggestions in this Guardian article.

Whilst I haven’t yet given up on artificially sweetened soft drinks, we reckon that we’ve cut both our sugar intake and our exposure to artificial additives. In many cases, we don’t know the long-term health effects of these additives, but we do know that some people struggle to lose weight despite eating a supposedly ‘healthy’ diet and exercising regularly.