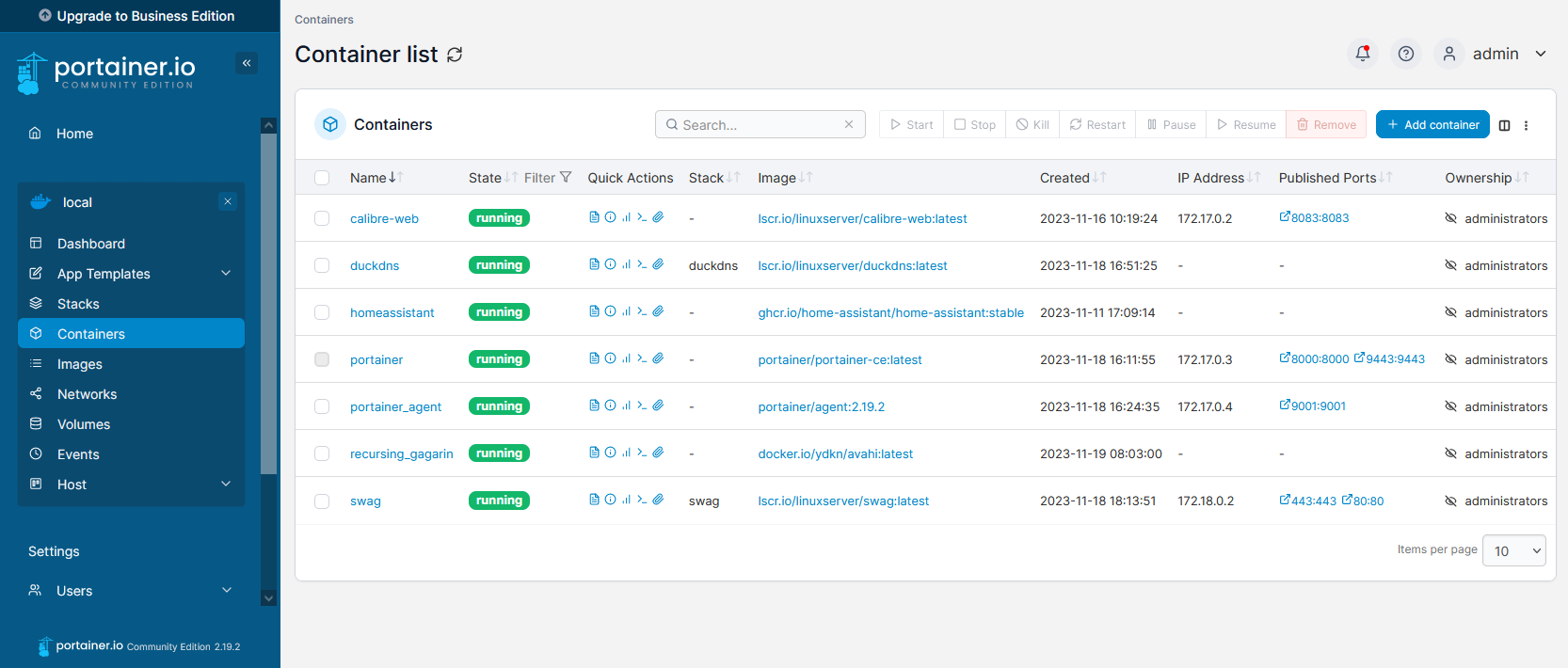

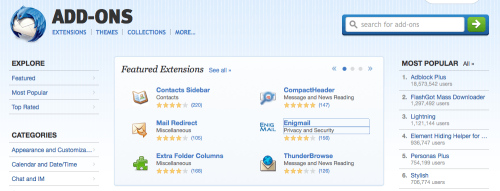

It’s been some time since I used Mozilla Thunderbird at home – I switched to Sparrow, then Apple’s own Mail app, before settling on Airmail last year. But at work, where I deal with a high volume of email, I prefer to use Thunderbird, instead of the provided Outlook 2010. There are a few add-ons which help me get stuff done, and so here is my list:

Lightning

Unlike Outlook, Lotus Notes or Evolution, Thunderbird doesn’t ship with a calendar. Lightning is an official Mozilla extension that adds a reasonably good calendar pane. Calendars can be stored locally, subscribed to as .ics files on the internet, or accessed over CalDAV (support for which is fairly basic), and Lightning handles multiple calendars well. A ‘Today’ panel shows up in your email pane so you can quickly glance at upcoming appointments.

Once you have Lightning installed, you can add some other calendar extensions. Some people use the Provider for Google Calendar extension – I don’t, as Google Calendar now supports CalDAV, so there’s no need for it. If you need access to Exchange calendars, there’s a Provider for Exchange extension too, although as we’re not (yet) on an Exchange system at work I haven’t tried it.

There’s also ThunderBirthDay, which shows the birthdays of your contacts as a calendar.

Google Contacts

If you use Gmail and its online address book to synchronise your contacts between devices, the Google Contacts add-on will copy those contacts into Thunderbird’s address book. It needs very little configuration – if you’ve already added a Gmail account to Thunderbird, it’ll use those settings.

This is probably of most interest to Windows and Linux users. On Mac OS X, Thunderbird can read from (and, I think, write to) the global OS X Address Book, which can itself be synchronised with Google Contacts, so this extension isn’t needed. In the past, I used the Zindus extension for this purpose, but it’s no longer under development.

Mail Redirect

Older email clients such as Eudora had a feature that let you redirect a message to someone else, leaving the message intact. Mail Redirect adds this as a function in Thunderbird.

It’s different to forwarding, where you quote the original message or send it as an attachment – with Redirect, the email appears in the new recipient’s inbox in almost exactly the same way as it did in yours. That way, if the new recipient replies, the reply goes to the sender and not to you.
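
To make the difference from forwarding concrete, here is a minimal Python sketch using the standard `email` package. It assumes that redirecting means re-sending the message unchanged with the RFC 5322 `Resent-From`, `Resent-To`, `Resent-Date` and `Resent-Message-ID` fields added; that is how the standard describes re-introducing a message, but it is not necessarily how Mail Redirect itself works. The `redirect` helper and the example addresses are hypothetical.

```python
from email import message_from_bytes, policy
from email.message import EmailMessage
from email.utils import formatdate, make_msgid


def redirect(original: EmailMessage, resent_from: str, resent_to: str) -> EmailMessage:
    """Return a redirected copy of `original` (hypothetical helper).

    From, To, Subject, Reply-To and the body are left exactly as they
    were; only RFC 5322 Resent-* fields are added.
    """
    # Re-parse the raw bytes so the original object is not modified.
    msg = message_from_bytes(original.as_bytes(), policy=policy.default)
    # RFC 5322 asks for resent fields to be prepended as a block;
    # EmailMessage appends them, which is enough for this sketch.
    msg["Resent-From"] = resent_from
    msg["Resent-To"] = resent_to
    msg["Resent-Date"] = formatdate(localtime=True)
    msg["Resent-Message-ID"] = make_msgid()
    return msg


if __name__ == "__main__":
    original = EmailMessage()
    original["From"] = "alice@example.com"
    original["To"] = "me@example.com"
    original["Subject"] = "Project update"
    original.set_content("Here is the latest status.\n")

    redirected = redirect(original, "me@example.com", "bob@example.com")
    # Bob sees Alice as the sender, so his reply goes to her, not to me.
    print(redirected["From"])       # alice@example.com
    print(redirected["Resent-To"])  # bob@example.com
```

Because `From` is untouched, a reply from the new recipient goes to the original sender. If the result is handed to `smtplib.SMTP.send_message` without explicit addresses, Python uses the `Resent-*` headers for the envelope when exactly one set of them is present.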

Thunderbird Conversations

If you like the way that Gmail groups email conversations together in the reading pane, then Thunderbird Conversations is for you. It replaces the standard reading pane with a view of the whole thread, including replies and messages that you have sent, even if they’re in a different folder. You can also use it to compose quick replies from the reading pane rather than opening a new window.

LookOut

LookOut was designed to improve compatibility with emails sent from Microsoft Outlook, especially older versions. Sometimes attachments get encapsulated in a ‘winmail.dat’ file, which Thunderbird doesn’t understand; LookOut makes these attachments available to download as regular files. Unfortunately, the extension apparently no longer works, and as there hasn’t been an update since 2011 I’m guessing it has been abandoned. Hopefully someone will come along and fix it.

Smiley Fixer

Another add-on that will make working alongside Outlook-using colleagues a bit easier. If you’ve ever received emails with a capital letter ‘J’ at the end of a sentence, that’s Microsoft Outlook converting a smiley into a character from the Wingdings font. Thunderbird doesn’t really understand this and just displays ‘J’, which is where Smiley Fixer comes in. It also corrects a few other symbols, such as arrows, but you may still see the occasional odd letter in people’s signatures.
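
As a rough illustration of the kind of fix involved, the Python sketch below rewrites Wingdings smileys in an HTML email body. It assumes the smiley arrives as a lone ‘J’ inside a `<span>` whose style sets `font-family:Wingdings`; real messages vary, the `fix_smileys` helper is hypothetical, and this is not Smiley Fixer’s actual code. Only two mappings are included: Wingdings ‘J’ (☺, U+263A) and ‘L’ (☹, U+2639).

```python
import re

# Hypothetical mapping for two Wingdings letters used as emoticons.
WINGDINGS_TO_UNICODE = {
    "J": "\u263A",  # smiling face
    "L": "\u2639",  # frowning face
}

# Assumed markup: one character in a span whose inline style mentions Wingdings.
_WINGDINGS_SPAN = re.compile(
    r"<span\s+style=(['\"])[^'\"]*font-family:\s*Wingdings[^'\"]*\1\s*>(.)</span>",
    re.IGNORECASE,
)


def fix_smileys(html: str) -> str:
    """Replace mapped Wingdings characters with their Unicode equivalents."""

    def replace(match: re.Match) -> str:
        char = match.group(2)
        # Leave unmapped characters (and their span) untouched.
        return WINGDINGS_TO_UNICODE.get(char, match.group(0))

    return _WINGDINGS_SPAN.sub(replace, html)


if __name__ == "__main__":
    print(fix_smileys("Thanks! <span style='font-family:Wingdings'>J</span>"))
```

Leaving unknown characters inside their original span means anything the mapping doesn’t cover still displays as it did before, much like the odd letters in signatures mentioned above.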

Enigmail

If you use GnuPG to encrypt messages, then you’ll probably have the Enigmail extension installed. Though it was originally a pain to set up, nowadays it works quite well without much technical knowledge. It lists all of the keys in your keyring, and can fetch public keys for everyone in your address book should you wish.

Dropbox for Filelink

Some time ago Thunderbird gained a feature called ‘Filelink’, which lets you send links to large files rather than including them as attachments. Whilst most people nowadays have very generous email storage limits, it’s sometimes best not to send large files as attachments. Out of the box, Thunderbird supports Box and the soon-to-be-discontinued Ubuntu One service, but the Dropbox for Filelink extension adds the more popular Dropbox. Another extension adds any service that supports WebDAV, which may be helpful if you’re in a corporate environment and don’t want to host files externally.

These are the extensions that I use to get the most out of Thunderbird. Although I’ve tried using Outlook 2010, I still prefer Thunderbird as it’s more flexible and can be set up how I want it.